Partners in Evaluation

Key Takeaways

- Anthropic’s Claude 3.7 Sonnet is the best performing model at 80.6% accuracy, excelling in both semantic and numerical extraction tasks.

- Claude 3.7 Sonnet (Thinking) follows closely at 79.2%, and Claude 3.5 Sonnet Latest takes third place at 78.1%.

- The benchmark tests the models’ multimodal capabilities: the documents are provided as images and contain both typed and handwritten parts.

- The best models perform very similarly on the Semantic Extraction task, while the gaps are more pronounced on the Numerical Extraction task. For Semantic Extraction, the remaining errors are most likely due to handwritten or poorly scanned documents that are hard for every model to read. In Numerical Extraction, by contrast, the annualized amount due requires calculations, and models differ widely in how well they carry them out.

Context

The MortgageTax benchmark evaluates the ability of language models to extract information from mortgage tax certificates. Vontive provided both the mortgage documents and the labeled queries, which we used as a foundation for the dataset.

When prompting the models, we provide the documents as images, testing their multimodal capabilities. We test them on two tasks:

- Semantic Extraction: We ask the models to extract the year, the parcel number, and the county from the tax certificate.

- Numerical Extraction: We ask the models to calculate the annualized amount due based on the tax certificate.

For both tasks, the models are asked to reply in a JSON format containing both an explanation and the final answer for each question. Not all models follow this format precisely, so some regex pattern matching is used to extract the final answers.
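A minimal sketch of how such answer extraction could work, assuming a strict JSON parse is tried first and a regex is used only as a fallback. The function name `extract_answers`, the helper `_as_text`, and the specific pattern are illustrative assumptions, not the pipeline actually used for this benchmark.

```python
import json
import re
from typing import Optional


def _as_text(value) -> Optional[str]:
    # JSON null stays None; numbers and strings are returned as text.
    return None if value is None else str(value)


def extract_answers(raw: str, fields: list) -> dict:
    """Return the "answer" value for each field, or None when it cannot be found."""
    # Attempt 1: parse the outermost {...} span as JSON, ignoring text around it.
    start, end = raw.find("{"), raw.rfind("}")
    if start != -1 and end > start:
        try:
            parsed = json.loads(raw[start:end + 1])
            return {f: _as_text(parsed.get(f, {}).get("answer")) for f in fields}
        except (json.JSONDecodeError, AttributeError):
            pass  # malformed or unexpectedly shaped JSON: fall back to regex

    # Attempt 2 (hypothetical pattern): find "field": { ... "answer": <token>.
    answers = {}
    for f in fields:
        pattern = (
            r'"%s"\s*:\s*\{.*?"answer"\s*:\s*("(?:[^"\\]|\\.)*"|[^,}\s]+)'
            % re.escape(f)
        )
        match = re.search(pattern, raw, re.DOTALL)
        if match is None:
            answers[f] = None
            continue
        token = match.group(1)
        if token.startswith('"'):
            answers[f] = token[1:-1]  # drop the surrounding quotes
        else:
            answers[f] = None if token == "null" else token
    return answers
```

The fallback is deliberately lenient: it tolerates text before or after the JSON and trailing commas, at the cost of possibly mis-reading a reply whose reasoning text itself contains the string `"answer"`.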

The dataset consists of 1258 documents, divided into three sets:

- Public Validation (20 samples): Publicly accessible, available on request.

- Private Validation (300 samples): Available for purchase to evaluate your own models/agents on.

- Test (938 samples): A hold-out set that we never share externally. Only this set is used for the numbers reported on this page.

To receive access to the Public or Private Validation sets, please reach out to us at contact@vals.ai.

Overall Results

The results show a clear divide in performance, with Anthropic’s Claude 3.7 Sonnet leading at 80.6% accuracy. Google’s Gemini models provide a cost-effective alternative: Gemini 2.0 Flash Exp in particular costs just $0.07/$0.30 and performs well on both tasks, reaching 73.0% accuracy.


The cost-accuracy graph highlights the trade-offs between performance and cost, with Gemini 2.0 Flash Exp and GPT-4o Mini standing out for their high quality-to-price ratio.
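To make the quality-to-price comparison concrete, here is a rough sketch using the accuracy and price figures quoted on this page. It assumes the two prices are USD per million input and output tokens respectively, and it uses a hypothetical 75/25 input-to-output token mix to blend them; neither assumption is stated on this page, and the function names are illustrative.

```python
def blended_price(input_price: float, output_price: float, input_share: float = 0.75) -> float:
    """Blend input and output prices using an assumed share of input tokens."""
    return input_share * input_price + (1.0 - input_share) * output_price


def accuracy_per_dollar(accuracy_pct: float, input_price: float, output_price: float) -> float:
    """Accuracy points obtained per blended dollar (higher is better)."""
    return accuracy_pct / blended_price(input_price, output_price)


# (accuracy %, input price, output price) as quoted on this page.
models = {
    "Claude 3.7 Sonnet": (80.6, 3.00, 15.00),
    "Gemini 2.0 Flash Exp": (73.0, 0.07, 0.30),
}

for name, (accuracy, price_in, price_out) in models.items():
    ratio = accuracy_per_dollar(accuracy, price_in, price_out)
    print(f"{name}: {ratio:.1f} accuracy points per blended dollar")
```

Under these assumptions the cheaper model wins on accuracy per dollar by a wide margin, even though it trails on raw accuracy. A different token mix changes the exact ratio but not the ordering for these two models.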

Claude 3.7 Sonnet

Release date: 2/24/2025

Accuracy: 80.6%

Latency: 5.7s

Cost: $3.00 / $15.00

- Anthropic's Claude 3.7 Sonnet is the best performing model at 80.6% accuracy, achieving first place on both tasks.

- It is slightly more expensive than GPT-4o, but still reasonably priced.

- It remains to be seen how the other multimodal model providers will respond to its release.


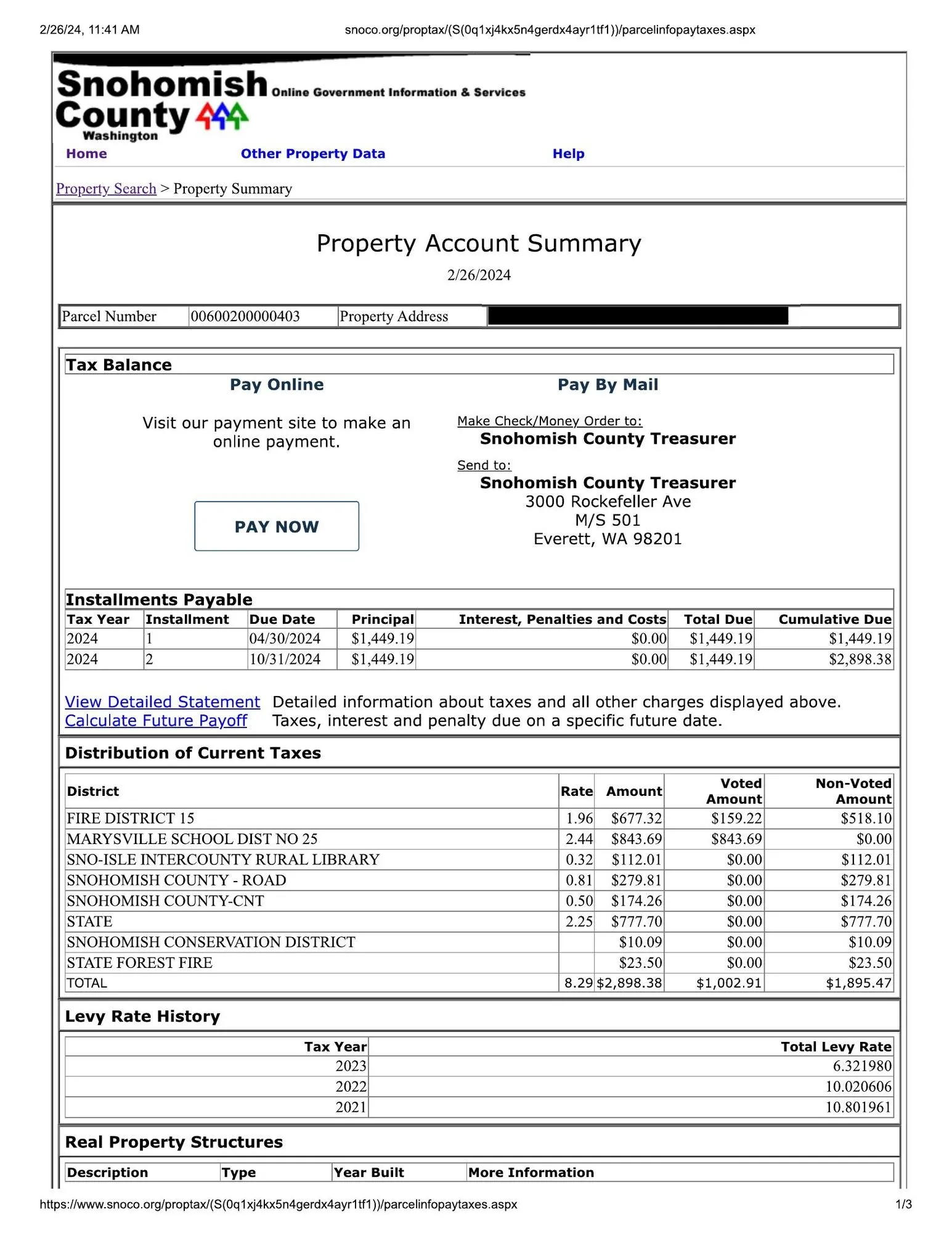

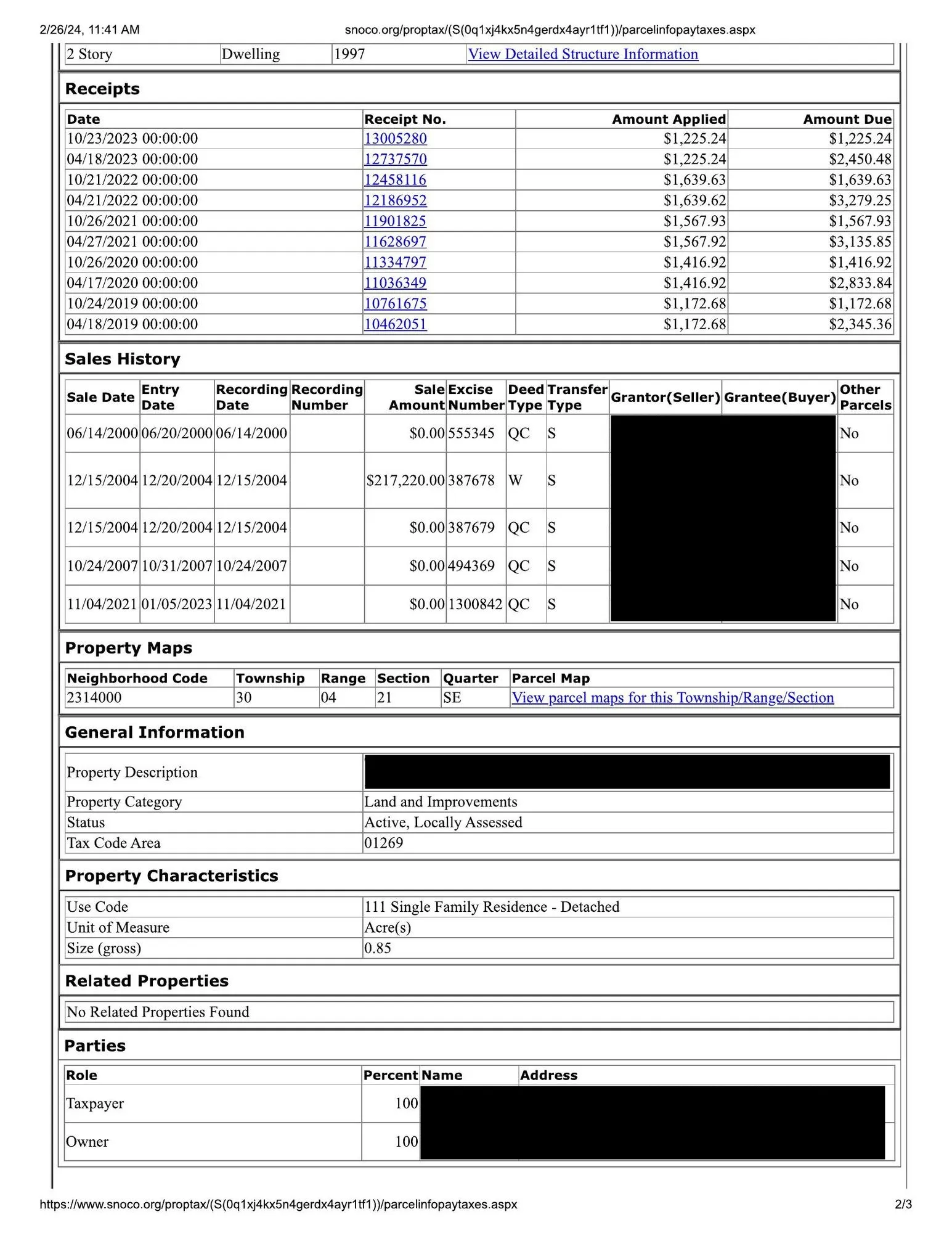

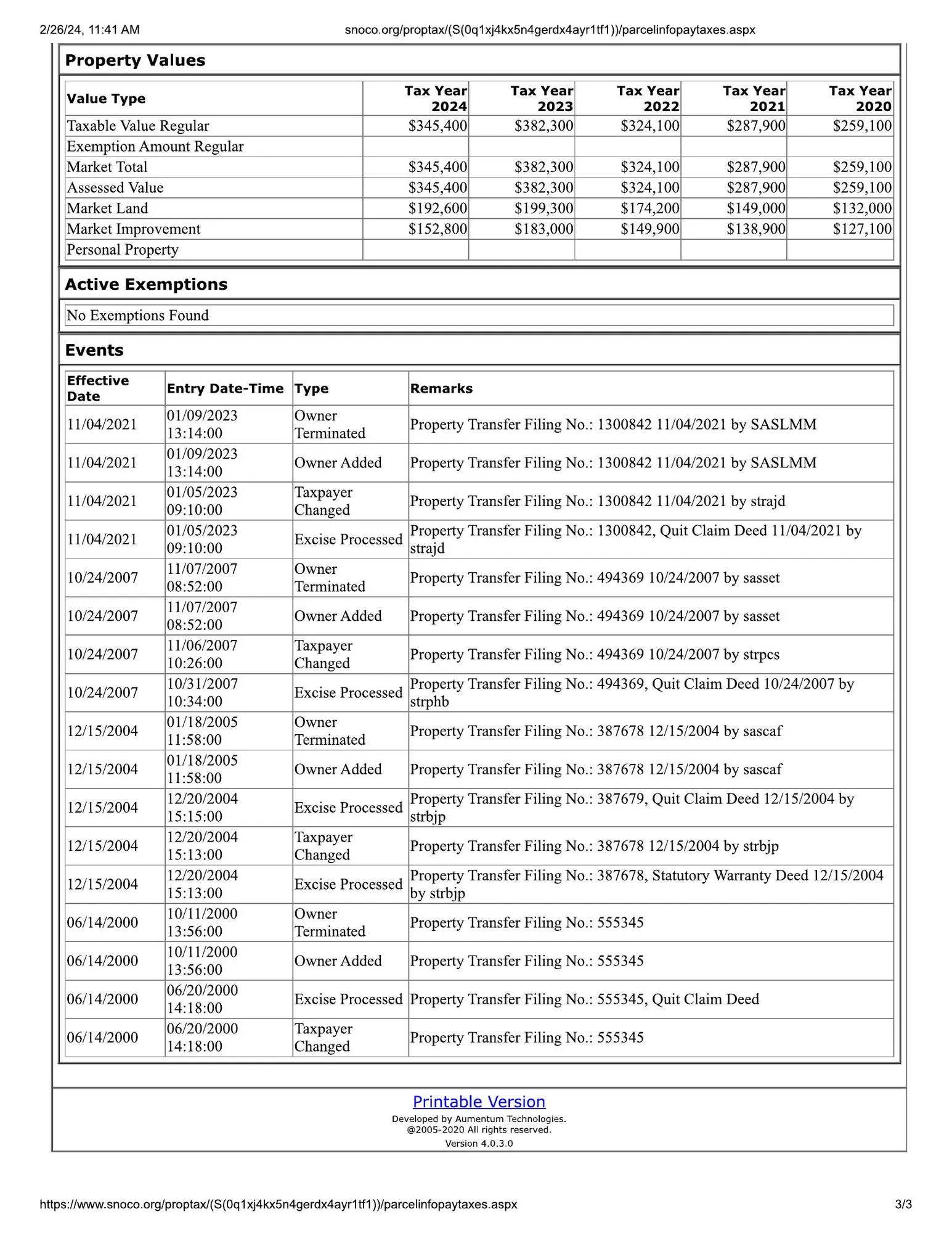

MortgageTax Input Example

Below is a typical example of the images passed to the models. For confidentiality reasons, we redacted the names and addresses of the owners and properties.

Here are examples of the questions asked of the models.

Numerical Extraction:

I will give you a question about a document, that is presented as several images.

You must output your answer in a structured way, strictly writing in the json format below (do not add text before or after writing the json). The answer field must only contain the exact string or number, no explanations.

    {
      "annualized_amount_due": {
        "reasoning": "Reasoning for annualized_amount_due",
        "answer": "Answer to annualized_amount_due"
      }
    }

--START OF annualized_amount_due QUESTION--

What is the total annualized amount of tax due? First look at the payments made and amount due/paid for the prior year. It is possible this is split between the city and county. If there were multiple payments made in the prior year, then the same amount of payments should be expected for the current year. Then find the total amount due for the current year. This number should be within 25% of the prior years total amount due/paid. If the amount due is not within 25% of the prior years total amount due/paid, then we can assume that only part of the total annual amount due has been paid so far. In this case, you should extrapolate the total amount due for the current year based on the number of payments required and the amount paid in each payment. This number should also be within 25% of the total amount due for the prior year. If this is still not within 25% of the total amount due for the prior year, then return null.

--END OF annualized_amount_due QUESTION--
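The decision procedure in the question above can be sketched as a small function. This is one reading of the prompt, not a reference implementation: it assumes "within 25%" is measured relative to the prior year's total, and the parameter names are hypothetical stand-ins for values read off the document.

```python
from typing import Optional


def within_tolerance(value: float, reference: float, tolerance: float = 0.25) -> bool:
    """True if value deviates from reference by at most tolerance * reference."""
    return abs(value - reference) <= tolerance * reference


def annualized_amount_due(
    prior_year_total: float,
    current_year_amount: float,
    amount_per_payment: float,
    payments_per_year: int,
) -> Optional[float]:
    # Step 1: accept the current-year amount if it is close to the prior-year total.
    if within_tolerance(current_year_amount, prior_year_total):
        return current_year_amount

    # Step 2: otherwise assume only part of the year is shown and extrapolate
    # from the per-payment amount and the number of payments expected.
    extrapolated = amount_per_payment * payments_per_year
    if within_tolerance(extrapolated, prior_year_total):
        return extrapolated

    # Step 3: neither figure is plausible, so the answer is null.
    return None
```

For example, with a prior-year total of 2000, a current-year figure of 1050, and two installments of 1050 each, the first check fails and the extrapolated 2100 is returned.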

Semantic Extraction:

You must output your answers in a structured way, strictly writing in the json format below (do not add text before or after writing the json). The answer field must only contain the exact string or number, no explanations.

    {
      "tax_year": {
        "reasoning": "Reasoning for tax_year",
        "answer": "Answer to tax_year question"
      },
      "county": {
        "reasoning": "Reasoning for county",
        "answer": "Answer to county question"
      },
      "parcel_number": {
        "reasoning": "Reasoning for parcel_number",
        "answer": "Answer to parcel_number question"
      }
    }

--START OF tax_year QUESTION--

What is the most recent year of taxes being paid? The year cannot be in the future, so the maximum value should be the current year. If the tax year spans 2 years, return the earlier year. For example, if the tax year is 2023-2024, or 2023-24 return 2023.

--END OF tax_year QUESTION--

--START OF county QUESTION--

What is the name of the county the property is in? Exclude the word 'County' in the answer. This must be a real county name in the United States. If you found a city, return the county the city is located in.

--END OF county QUESTION--

--START OF parcel_number QUESTION--

What is the parcel or map number or APN or tax ID of the property? This is a unique identifier for the property, but is not the address of the property.

--END OF parcel_number QUESTION--
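The tax_year and county rules stated in the questions above can be illustrated with a short normalization sketch. The helper names `normalize_tax_year` and `normalize_county` are hypothetical, and this page does not describe how answers are compared to labels, so the case folding here is only an assumption about what a reasonable comparison might do.

```python
import re
from typing import Optional


def normalize_tax_year(text: str, current_year: int) -> Optional[int]:
    """Return the earlier year of a span such as '2023-2024' or '2023-24'."""
    match = re.search(r"\b(\d{4})(?:-\d{2,4})?\b", text)
    if match is None:
        return None
    year = int(match.group(1))
    # The prompt states that the year cannot be in the future.
    return year if year <= current_year else None


def normalize_county(text: str) -> str:
    """Drop a trailing 'County', collapse whitespace, and fold case."""
    cleaned = re.sub(r"\s+county\s*$", "", text.strip(), flags=re.IGNORECASE)
    return " ".join(cleaned.split()).casefold()
```

Passing `current_year` explicitly, rather than reading the system clock, keeps the check reproducible when results are recomputed later.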